LSC-Eval: A General Framework to Evaluate Methods for Assessing Dimensions of Lexical Semantic Change Using LLM-Generated Synthetic Data

Mar 11, 2025· ,,,,·

0 min read

,,,,·

0 min read

Naomi Baes

Raphaël Merx

Nick Haslam

Ekaterina Vylomova

Haim Dubossarsky

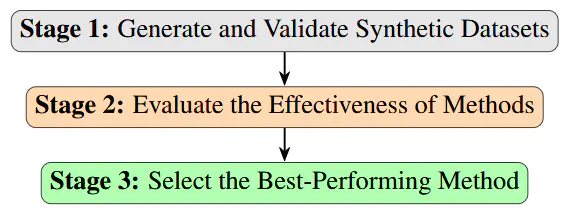

Stages of the Evaluation Framework.

Stages of the Evaluation Framework.Abstract

Lexical Semantic Change (LSC) provides insight into cultural and social dynamics. Yet, the validity of methods for measuring different kinds of LSC remains unestablished due to the absence of historical benchmark datasets. To address this gap, we propose LSC-Eval, a novel three-stage general-purpose evaluation framework to: (1) develop a scalable methodology for generating synthetic datasets that simulate theory-driven LSC using In-Context Learning and a lexical database; (2) use these datasets to evaluate the sensitivity of computational methods to synthetic change; and (3) assess their suitability for detecting change in specific dimensions and domains. We apply LSC-Eval to simulate changes along the Sentiment, Intensity, and Breadth (SIB) dimensions, as defined in the SIBling framework, using examples from psychology. We then evaluate the ability of selected methods to detect these controlled interventions. Our findings validate the use of synthetic benchmarks, demonstrate that tailored methods effectively detect changes along SIB dimensions, and reveal that a state-of-the-art LSC model faces challenges in detecting affective dimensions of LSC. LSC-Eval offers a valuable tool for dimension- and domain-specific benchmarking of LSC methods, with particular relevance to the social sciences.

Type

Publication

ACL Findings